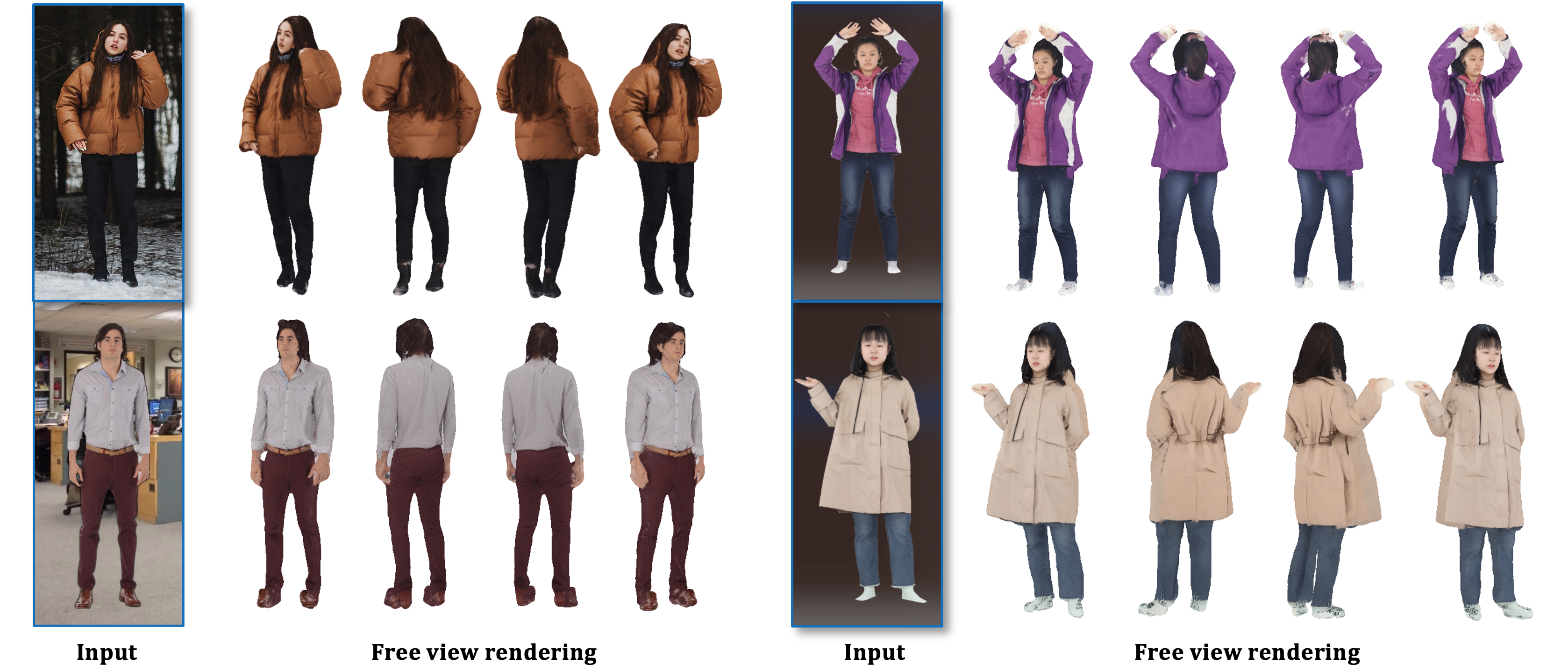

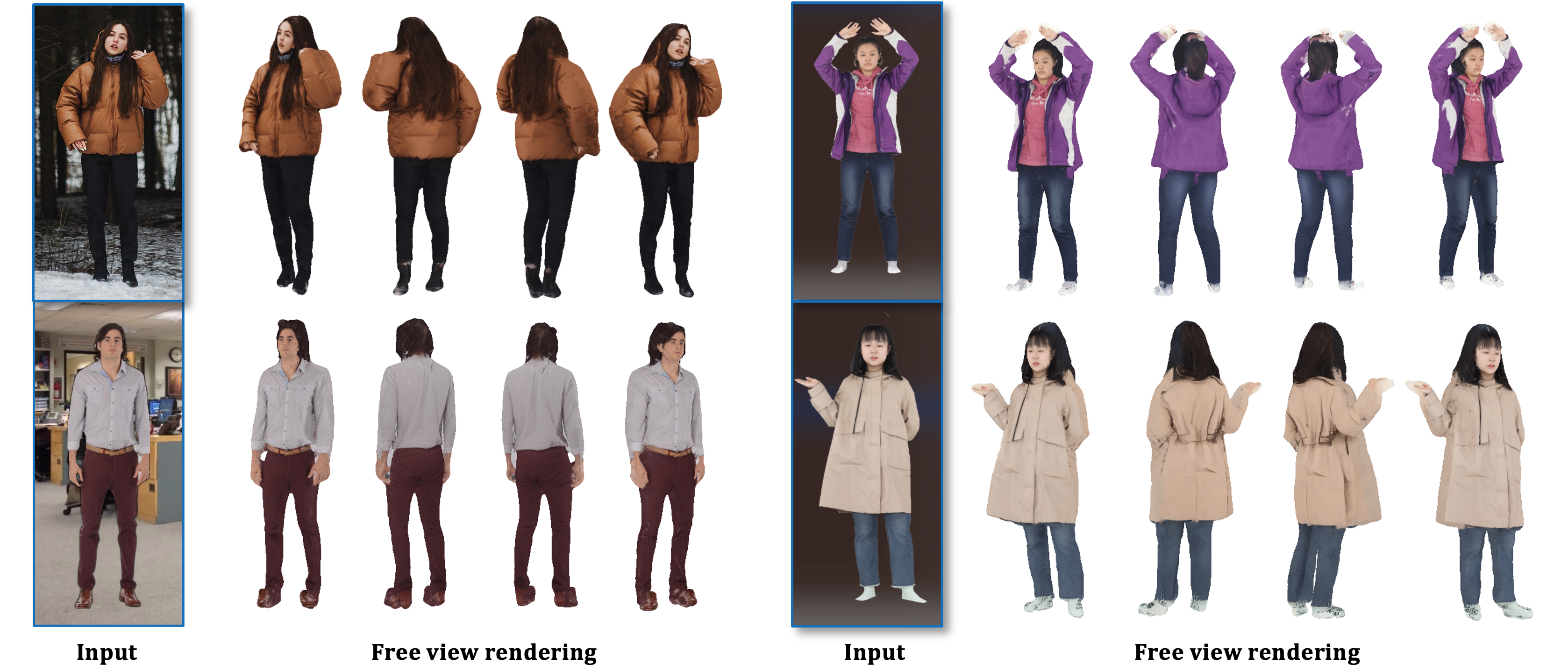

TL;DR: ConTex-Human turns a single image into a 3D human model, enabling texture-consistent, high-fidelity free-view rendering.

In this work, we address the challenge of rendering a 3D human from a single image in a free-view manner.

Some existing approaches achieve this either by using generalizable pixel-aligned implicit fields to reconstruct a textured human mesh, or by employing a 2D diffusion model as guidance through Score Distillation Sampling (SDS) to lift the 2D image into 3D space.
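For readers unfamiliar with SDS, the toy sketch below shows its core update: noise the current rendering, ask a denoiser to predict that noise, and move the rendering along w(t)(ε̂ − ε) without backpropagating through the denoiser. It is a minimal illustration under loud assumptions: the "rendering" is a pixel vector optimized directly (so ∂x/∂θ is the identity), `oracle_denoiser` is a hypothetical stand-in that knows a target image rather than a real Zero123 or Stable Diffusion model, and the cosine schedule and weighting w(t) = σ_t² are illustrative choices.

```python
import math
import random

def alpha_sigma(t: float) -> tuple[float, float]:
    """Variance-preserving cosine schedule: alpha_t**2 + sigma_t**2 == 1."""
    return math.cos(0.5 * math.pi * t), math.sin(0.5 * math.pi * t)

def oracle_denoiser(x_t, t, target):
    """Hypothetical noise predictor eps_hat(x_t; y, t) that knows the target y."""
    alpha, sigma = alpha_sigma(t)
    return [(xt - alpha * y) / sigma for xt, y in zip(x_t, target)]

def sds_step(x, target, lr, rng):
    """One SDS update on the pixel vector x (x is the parameter, so dx/dtheta = I)."""
    t = rng.uniform(0.02, 0.98)                              # random diffusion timestep
    alpha, sigma = alpha_sigma(t)
    eps = [rng.gauss(0.0, 1.0) for _ in x]                   # sampled Gaussian noise
    x_t = [alpha * xi + sigma * e for xi, e in zip(x, eps)]  # forward diffusion
    eps_hat = oracle_denoiser(x_t, t, target)
    weight = sigma ** 2                                      # illustrative w(t)
    # SDS gradient w(t) * (eps_hat - eps); the denoiser Jacobian is skipped.
    grad = [weight * (eh - e) for eh, e in zip(eps_hat, eps)]
    return [xi - lr * g for xi, g in zip(x, grad)]

rng = random.Random(0)
target = [0.2, -0.5, 0.9]
x = [0.0, 0.0, 0.0]
for _ in range(300):
    x = sds_step(x, target, lr=0.5, rng=rng)
print([round(v, 3) for v in x])
```

With this oracle, ε̂ − ε reduces to α_t(x − y)/σ_t, so every step pulls x toward the target. With a real diffusion model, the same update instead pulls renderings toward images the model finds likely under its conditioning, which is why SDS alone need not reproduce the input image's exact texture.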

However, a generalizable implicit field often produces over-smoothed textures, while the SDS method tends to yield novel views whose texture is inconsistent with the input image.

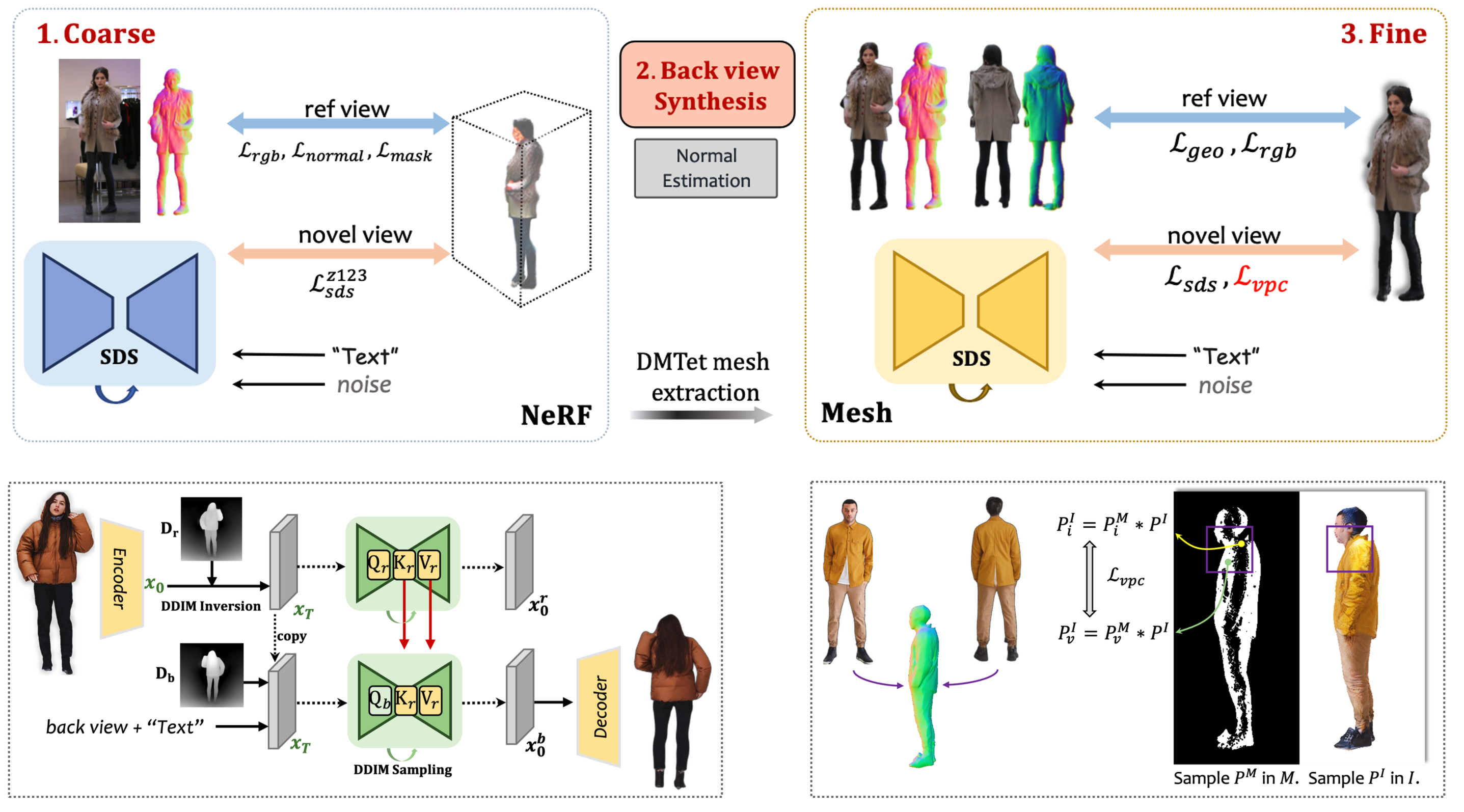

In this paper, we introduce a texture-consistent back-view synthesis module that transfers the reference image content to the back view through depth- and text-guided attention injection.
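Attention injection is commonly implemented by letting the tokens of the view being generated attend to keys and values from the reference image in addition to their own. The sketch below shows that general idea in plain Python with identity query/key/value projections. It is a hypothetical simplification, not ConTex-Human's implementation: it omits the depth conditioning and the text prompt entirely, and `injected_self_attention` and its `inject` flag are illustrative names.

```python
import math

Matrix = list[list[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]

def softmax(row: list[float]) -> list[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    z = sum(exps)
    return [e / z for e in exps]

def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(q k^T / sqrt(d)) v."""
    d = len(q[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(weights, v)

def injected_self_attention(back_feats: Matrix, ref_feats: Matrix, inject: bool = True) -> Matrix:
    """Self-attention over back-view tokens; when `inject` is True, reference
    tokens are appended as extra keys/values (hypothetical identity projections)."""
    q = back_feats
    kv = back_feats + ref_feats if inject else back_feats  # concat along token axis
    return attention(q, kv, kv)

back = [[1.0, 0.0], [0.0, 1.0]]
ref = [[2.0, 2.0]]
print(injected_self_attention(back, ref))
print(injected_self_attention(back, ref, inject=False))
```

Because the reference tokens enter only as extra keys and values, the output keeps one row per back-view token, while back-view tokens that resemble a reference token borrow part of its value. That is the mechanism by which reference content can propagate into a generated view.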

Moreover, to alleviate the color distortion that occurs in side regions, we propose a visibility-aware patch consistency regularization for texture mapping and refinement, combined with the synthesized back-view texture. With these techniques, we achieve high-fidelity, texture-consistent human rendering from a single image.
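The text does not give the regularizer's formula, so the following is only one plausible reading, with every name hypothetical: a pixel counts as visible from a reference view when its surface normal faces that camera (cosine above a threshold), and the loss is a squared color difference over co-located patches, averaged only over visible pixels so that unreliable side regions contribute nothing. Images are grayscale nested lists to keep the sketch dependency-free.

```python
Image = list[list[float]]

def visibility_weight(normal, to_camera, threshold=0.2):
    """Hypothetical visibility test: 1.0 if the unit surface normal faces the
    reference camera (cosine above `threshold`), else 0.0."""
    cos = sum(n * d for n, d in zip(normal, to_camera))
    return 1.0 if cos > threshold else 0.0

def extract_patch(img: Image, cy: int, cx: int, r: int) -> Image:
    """(2r+1) x (2r+1) patch centered at (cy, cx); assumes it lies inside img."""
    return [row[cx - r : cx + r + 1] for row in img[cy - r : cy + r + 1]]

def patch_consistency_loss(rendered: Image, reference: Image, visibility: Image,
                           centers, r: int = 1) -> float:
    """Visibility-masked mean squared difference over co-located patches."""
    num, den = 0.0, 0.0
    for cy, cx in centers:
        pr, pf, pv = (extract_patch(m, cy, cx, r) for m in (rendered, reference, visibility))
        for row_r, row_f, row_v in zip(pr, pf, pv):
            for a, b, v in zip(row_r, row_f, row_v):
                num += v * (a - b) ** 2  # invisible pixels (v = 0) add nothing
                den += v
    return num / den if den > 0 else 0.0

rendered = [[0.5] * 4 for _ in range(4)]
reference = [[0.5] * 4 for _ in range(4)]
visibility = [[1.0] * 4 for _ in range(4)]
rendered[0][0] = 1.0     # a color error ...
visibility[0][0] = 0.0   # ... at a pixel the reference camera cannot see
print(patch_consistency_loss(rendered, reference, visibility, centers=[(1, 1)]))
```

Masking by visibility means the loss only trusts reference colors where the reference camera actually observed the surface, which is the property the regularizer needs near the side regions.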

Experiments on both real and synthetic data demonstrate the effectiveness of our method and show that it outperforms previous baseline methods.

Our framework is composed of three main stages. (1) Coarse Stage. Given a human image as reference, we leverage the view-aware 2D diffusion model Zero123 to perform SDS and optimize a NeRF. (2) Back-View Synthesis Stage. The coarse image and depth map are used to generate a texture-consistent, high-fidelity back view. (3) Fine Stage. We convert the NeRF into a DMTet mesh and optimize the mesh with front/back normal maps. The texture field is optimized with the front/back images, Zero123 / Stable Diffusion SDS, and the visibility-aware patch consistency regularization.
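To make the stage dependencies explicit, here is a hypothetical skeleton that wires the three stages together and combines the fine-stage texture terms listed above into one weighted objective. The stage callables, `StageOutputs`, and the loss weights are assumptions for illustration only; the description names the loss terms but not their weights or interfaces.

```python
from dataclasses import dataclass
from typing import Any, Callable

# Hypothetical weights: the text names these terms but gives no values.
DEFAULT_TEXTURE_WEIGHTS = {
    "front_rgb": 1.0,
    "back_rgb": 1.0,
    "sds_zero123": 0.1,
    "sds_stable_diffusion": 0.1,
    "patch_consistency": 0.5,
}

@dataclass
class StageOutputs:
    nerf: Any = None
    coarse_back_rgb: Any = None
    coarse_back_depth: Any = None
    back_view: Any = None
    mesh: Any = None

def run_pipeline(reference_image: Any,
                 coarse_stage: Callable,
                 back_view_synthesis: Callable,
                 fine_stage: Callable) -> StageOutputs:
    """Chain the three stages; each is passed in as a (hypothetical) callable."""
    out = StageOutputs()
    # (1) Coarse stage: Zero123 SDS optimizes a NeRF from the reference image.
    out.nerf, out.coarse_back_rgb, out.coarse_back_depth = coarse_stage(reference_image)
    # (2) Back-view synthesis: coarse image + depth -> texture-consistent back view.
    out.back_view = back_view_synthesis(reference_image, out.coarse_back_rgb, out.coarse_back_depth)
    # (3) Fine stage: NeRF -> DMTet mesh, refined with front/back normals and textures.
    out.mesh = fine_stage(out.nerf, reference_image, out.back_view)
    return out

def texture_objective(terms: dict, weights: dict = DEFAULT_TEXTURE_WEIGHTS) -> float:
    """Weighted sum of the fine-stage texture losses; every weighted term must be present."""
    missing = set(weights) - set(terms)
    if missing:
        raise KeyError(f"missing loss terms: {sorted(missing)}")
    return sum(weights[name] * terms[name] for name in weights)
```

Passing the stages in as callables keeps the skeleton independent of any particular NeRF, diffusion, or DMTet implementation, and the explicit `StageOutputs` record shows that the back view depends on the coarse stage while the fine stage depends on both.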

@misc{gao2023contexhuman,
  title={ConTex-Human: Free-View Rendering of Human from a Single Image with Texture-Consistent Synthesis},
  author={Xiangjun Gao and Xiaoyu Li and Chaopeng Zhang and Qi Zhang and Yanpei Cao and Ying Shan and Long Quan},
  year={2023},
  eprint={2311.17123},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}